Econometrics is crucial for fields like data science and finance, involving a variety of models and testing methods. This guide outlines common interview questions, covering topics such as data types, regression models, hypothesis testing, time series analysis, and the distinction between fixed and random effects in panel data.

Econometrics

Ridge vs. OLS: Overcoming Multicollinearity Issues

Multicollinearity can undermine regression models by producing unstable coefficient estimates and inflated standard errors. Ridge regression addresses this by adding an L2 penalty term that shrinks coefficients toward zero, trading a small amount of bias for lower variance and more reliable predictions. While not suitable for all cases, it is particularly effective when predictors are highly correlated or outnumber observations.
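
As a rough illustration of the shrinkage described above, the sketch below fits OLS and ridge on two nearly identical predictors using the closed form (X'X + lam * I) b = X'y. The data, the function names, the no-intercept setup, and the penalty value `lam=1.0` are all assumptions made for this example, not taken from the article.

```python
def solve_2x2(a, b, c, d, r1, r2):
    """Solve [[a, b], [c, d]] @ [u, v] = [r1, r2] with Cramer's rule."""
    det = a * d - b * c
    return (r1 * d - b * r2) / det, (a * r2 - c * r1) / det


def ridge_fit(x1, x2, y, lam):
    """Return (b1, b2) solving (X'X + lam * I) b = X'y; lam = 0 gives OLS.

    Hypothetical helper: two predictors, no intercept, for illustration only.
    """
    s11 = sum(u * u for u in x1)
    s12 = sum(u * v for u, v in zip(x1, x2))
    s22 = sum(v * v for v in x2)
    t1 = sum(u * w for u, w in zip(x1, y))
    t2 = sum(v * w for v, w in zip(x2, y))
    return solve_2x2(s11 + lam, s12, s12, s22 + lam, t1, t2)


# Made-up data: x2 is almost a copy of x1, so the predictors are collinear.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [1.01, 1.98, 3.02, 3.97, 5.01]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

ols = ridge_fit(x1, x2, y, lam=0.0)
ridge = ridge_fit(x1, x2, y, lam=1.0)
print("OLS coefficients:  ", ols)
print("Ridge coefficients:", ridge)
```

On this toy data the OLS coefficients take large values of opposite sign, while the ridge coefficients are much smaller in magnitude. In practice the penalty is usually chosen by cross-validation rather than fixed by hand.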

Bayesian Inference vs. Maximum Likelihood Estimation: What’s the Difference, and Why Should You Care?

Bayesian inference and Maximum Likelihood Estimation (MLE) are key statistical methods for learning from data. MLE identifies the parameter values that maximize the likelihood of the observed data, while Bayesian inference combines prior beliefs with the observed data to produce a posterior distribution over possible parameters. Each method has its own strengths; MLE is typically simpler and faster, and works well when data is plentiful.
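
To make the contrast concrete, here is a minimal sketch for a coin-flip (Bernoulli) model. The counts (7 successes in 10 trials) and the Beta(2, 2) prior are assumptions chosen for illustration; the Beta prior is used because it is conjugate to the Bernoulli likelihood, which keeps the posterior in closed form.

```python
def mle_bernoulli(successes, trials):
    """MLE of the success probability: the observed success rate."""
    return successes / trials


def posterior_beta(successes, trials, prior_a, prior_b):
    """Posterior Beta(a, b) parameters after updating a Beta(prior_a, prior_b) prior."""
    return prior_a + successes, prior_b + (trials - successes)


# Assumed data: 7 successes in 10 trials; assumed prior: Beta(2, 2).
successes, trials = 7, 10
p_mle = mle_bernoulli(successes, trials)
post_a, post_b = posterior_beta(successes, trials, prior_a=2.0, prior_b=2.0)
post_mean = post_a / (post_a + post_b)

print("MLE point estimate:", p_mle)
print("Posterior: Beta(%.0f, %.0f), mean %.4f" % (post_a, post_b, post_mean))
```

The MLE returns a single number, while the Bayesian update returns a full distribution whose mean is pulled toward the prior. As the number of trials grows, the two estimates move closer together.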

GMM (Generalized Method of Moments) Made Simple

The Generalized Method of Moments (GMM) is an essential tool in econometrics for estimating parameters from moment conditions without assuming a specific data distribution. This post explains the intuition behind GMM, identification, and estimation mechanics, and includes practical examples in R and Python, emphasizing its strengths and challenges in econometric analysis.
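
The following is a small sketch of the idea, not the linked post's own example. It estimates one parameter from two moment conditions (an overidentified case) by minimizing a quadratic form in the sample moments. The data, the choice of moment conditions (E[x] = mu and E[x^2] = 2 mu^2, which both hold for an exponential distribution with mean mu), the identity weighting matrix, and the grid search are all assumptions made to keep the example short.

```python
def sample_moments(data):
    """Return the sample mean and the sample mean of squares."""
    n = len(data)
    return sum(data) / n, sum(v * v for v in data) / n


def gmm_objective(mu, m1, m2):
    """Quadratic form g(mu)' W g(mu) with W = identity (an assumed weighting)."""
    g1 = m1 - mu             # moment condition E[x] - mu = 0
    g2 = m2 - 2.0 * mu ** 2  # moment condition E[x^2] - 2 mu^2 = 0
    return g1 * g1 + g2 * g2


def gmm_estimate(data):
    """Minimize the objective over a coarse grid of candidate values."""
    m1, m2 = sample_moments(data)
    grid = [i / 100 for i in range(1, 501)]  # candidates 0.01 .. 5.00
    return min(grid, key=lambda mu: gmm_objective(mu, m1, m2))


# Made-up positive data, used only for illustration.
data = [0.2, 0.5, 0.9, 1.4, 2.0, 3.1]
mu_hat = gmm_estimate(data)
print("GMM estimate of mu:", mu_hat)
```

With two conditions and one parameter, no single value satisfies both sample moments exactly, so the estimate is a compromise between them. A fuller treatment would replace the identity matrix with an efficient weighting matrix and use a proper numerical optimizer.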

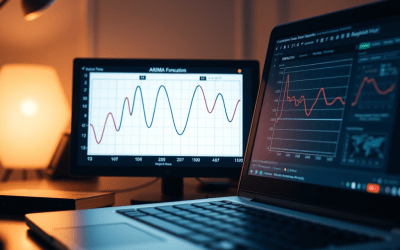

ARIMA Modeling: Steps to Accurate Time Series Forecasting

ARIMA, or AutoRegressive Integrated Moving Average, is a statistical method used for time series analysis and forecasting. It combines past values (the autoregressive part), past forecast errors (the moving-average part), and differencing, which removes trends to make the series stationary. ARIMA is effective for univariate data with trends, can be extended to handle seasonality (seasonal ARIMA), and often outperforms simpler methods. Key steps include data preparation, visualization, stationarity checks, model fitting, and forecasting.
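
The differencing and autoregressive pieces can be sketched by hand as below. This is a deliberately simplified ARIMA(1, 1, 0) with no moving-average term and no intercept, fitted by least squares; the series and function names are assumptions for illustration. Real work would normally rely on a dedicated time series library.

```python
def difference(series):
    """First difference: the 'I' (integrated) step, used to remove a trend."""
    return [b - a for a, b in zip(series, series[1:])]


def fit_ar1(values):
    """Least-squares AR(1) coefficient without intercept."""
    num = sum(cur * prev for prev, cur in zip(values, values[1:]))
    den = sum(prev * prev for prev in values[:-1])
    return num / den


def forecast_next(series):
    """One-step forecast: predict the next difference, then undo the differencing."""
    diffs = difference(series)
    phi = fit_ar1(diffs)
    return series[-1] + phi * diffs[-1], phi


# Made-up series whose increments shrink by half each step.
series = [0.0, 8.0, 12.0, 14.0, 15.0]
next_value, phi = forecast_next(series)
print("Estimated AR(1) coefficient:", phi)
print("One-step-ahead forecast:", next_value)
```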

Standard Error Explained: Types & Formulas

The Standard Error (SE) measures how much a sample statistic varies from sample to sample, and therefore how precisely it estimates the true population parameter. It helps assess the accuracy of estimates such as the mean, regression coefficients, or proportions. Several types exist, including the Standard Error of the Mean (SEM) and robust standard errors, which remain reliable under data irregularities such as heteroskedasticity.
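
Two of the most common formulas can be written in a few lines: SEM = s / sqrt(n) for a mean, and sqrt(p(1 - p) / n) for a proportion. The sample values and function names below are assumptions for illustration.

```python
import math
import statistics


def standard_error_of_mean(sample):
    """SEM: sample standard deviation (n - 1 denominator) divided by sqrt(n)."""
    return statistics.stdev(sample) / math.sqrt(len(sample))


def standard_error_of_proportion(p_hat, n):
    """SE of a sample proportion p_hat based on n observations."""
    return math.sqrt(p_hat * (1.0 - p_hat) / n)


# Made-up sample, used only for illustration.
sample = [2, 4, 4, 4, 5, 5, 7, 9]
sem = standard_error_of_mean(sample)
se_prop = standard_error_of_proportion(0.5, 100)

print("Standard error of the mean:", round(sem, 4))
print("Standard error of a 50% proportion, n = 100:", se_prop)
```

Both formulas shrink with the square root of the sample size, so quadrupling n halves the standard error.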

Key Concepts in Theory of Probability Explained Simply

This article outlines key concepts in probability and random experiments, including randomness, mutually exclusive and exhaustive events, classical probability, conditional probability, and foundational theorems such as Bayes’ Theorem and the Law of Large Numbers. Each concept is illustrated with examples that show its significance in probability theory.
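
As one worked example of conditional probability, Bayes' Theorem can be applied to a screening test. The prevalence (1%), sensitivity (95%), and false positive rate (5%) below are assumed numbers chosen for illustration.

```python
def bayes_posterior(prior, p_evidence_given_h, p_evidence_given_not_h):
    """P(H | E) = P(E | H) P(H) / [P(E | H) P(H) + P(E | not H) P(not H)]."""
    evidence = (p_evidence_given_h * prior
                + p_evidence_given_not_h * (1.0 - prior))
    return p_evidence_given_h * prior / evidence


# Assumed inputs: 1% prevalence, 95% sensitivity, 5% false positive rate.
posterior = bayes_posterior(prior=0.01,
                            p_evidence_given_h=0.95,
                            p_evidence_given_not_h=0.05)
print("P(condition | positive test) = %.3f" % posterior)
```

Even with an accurate test, the posterior stays well below one half here because the condition is rare, which is why the prior matters.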

Logistic Regression Explained: Key Concepts and Tests

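Logistic regression predicts the probability of a binary outcome from predictor variables by modeling the log-odds as a linear function of the predictors and mapping the result to a probability through a CDF such as the logistic function. Because the resulting model is non-linear, it is estimated by MLE rather than least squares. Key validation tools include the Hosmer-Lemeshow test, Weight of Evidence (WOE), and the Population Stability Index (PSI), which are used to check model fit, good-bad separation, and stability across populations.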
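
A minimal sketch of the estimation step follows: it maximizes the Bernoulli log-likelihood of a one-predictor logistic model by plain gradient ascent. The data, learning rate, and step count are assumptions for illustration; statistical packages typically use Newton-type methods instead.

```python
import math


def sigmoid(z):
    """Logistic CDF: maps log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, learning_rate=0.1, steps=5000):
    """Gradient ascent on the average log-likelihood; returns (intercept, slope)."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad0 = grad1 = 0.0
        for x, y in zip(xs, ys):
            residual = y - sigmoid(b0 + b1 * x)  # score contribution of one point
            grad0 += residual
            grad1 += residual * x
        b0 += learning_rate * grad0 / n
        b1 += learning_rate * grad1 / n
    return b0, b1


# Made-up, non-separable data: higher x makes y = 1 more likely.
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys = [0, 0, 0, 1, 0, 1, 1, 1]
b0, b1 = fit_logistic(xs, ys)

print("Intercept and slope (log-odds scale):", b0, b1)
print("Odds ratio per unit of x:", math.exp(b1))
print("P(y = 1 | x = 4.0):", sigmoid(b0 + b1 * 4.0))
```

The exponentiated slope is the odds ratio: the factor by which the odds of the outcome change for a one-unit increase in the predictor.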

Understanding Panel Data: Definition, Types, and Benefits

Panel data combines cross-sectional and time-series dimensions by following the same units over several periods, and it can be balanced or unbalanced. It offers advantages in regression modeling by helping control for omitted variables and by increasing the quality and quantity of data. This combination enables a better understanding of dynamic behavior over time, providing insights that neither cross-sectional nor time-series data can offer alone.
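
The omitted-variable advantage can be illustrated with the within (fixed effects) transformation, which subtracts each entity's own mean before fitting a slope. The two-entity panel below is made up for this sketch: each entity has its own unobserved level, and the true slope is 2 by construction.

```python
def ols_slope(xs, ys):
    """Slope of a simple regression of ys on xs (with intercept)."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def demean(values):
    """Subtract the entity-specific mean (the within transformation)."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


# Made-up balanced panel: y = entity_effect + 2 * x, with effects 10 and -5.
panel = {
    "A": ([1.0, 2.0, 3.0], [12.0, 14.0, 16.0]),
    "B": ([4.0, 5.0, 6.0], [3.0, 5.0, 7.0]),
}

pooled_x = [x for xs, _ in panel.values() for x in xs]
pooled_y = [y for _, ys in panel.values() for y in ys]
within_x = [x for xs, _ in panel.values() for x in demean(xs)]
within_y = [y for _, ys in panel.values() for y in demean(ys)]

pooled_slope = ols_slope(pooled_x, pooled_y)
within_slope = ols_slope(within_x, within_y)
print("Pooled OLS slope (ignores entity effects):", pooled_slope)
print("Within / fixed effects slope:", within_slope)
```

Pooling the data ignores the entity-level differences and yields a negative slope here, while the within estimator recovers the slope of 2 built into the toy data.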

6 Effective Tests for Normal Distribution

Normality is the property of a random variable following a normal distribution, whose density is the familiar bell curve. This assumption is critical for many statistical tests and hypothesis evaluations. Multiple methods, both visual and statistical, are used to assess normality. Checking normality matters because it affects the reliability and interpretation of data analysis.
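
As one example of a statistical check, the sketch below computes the Jarque-Bera statistic, which combines sample skewness and kurtosis. Jarque-Bera is used here as a commonly cited test and is an assumption of this example; the article's own list of six tests is not reproduced. The sample is made up.

```python
def jarque_bera(sample):
    """Jarque-Bera statistic: n / 6 * (S^2 + (K - 3)^2 / 4).

    S is the sample skewness and K the sample kurtosis, both computed
    from central moments with an n denominator.
    """
    n = len(sample)
    mean = sum(sample) / n
    m2 = sum((v - mean) ** 2 for v in sample) / n
    m3 = sum((v - mean) ** 3 for v in sample) / n
    m4 = sum((v - mean) ** 4 for v in sample) / n
    skewness = m3 / m2 ** 1.5
    kurtosis = m4 / m2 ** 2
    return n / 6.0 * (skewness ** 2 + (kurtosis - 3.0) ** 2 / 4.0)


# Made-up symmetric sample, far too small for a real test.
sample = [-2.0, -1.0, 0.0, 1.0, 2.0]
jb = jarque_bera(sample)
print("Jarque-Bera statistic:", round(jb, 4))
```

Under the null hypothesis of normality the statistic is approximately chi-square with 2 degrees of freedom in large samples, so values above about 5.99 reject normality at the 5% level. The approximation is poor for small samples like this one.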